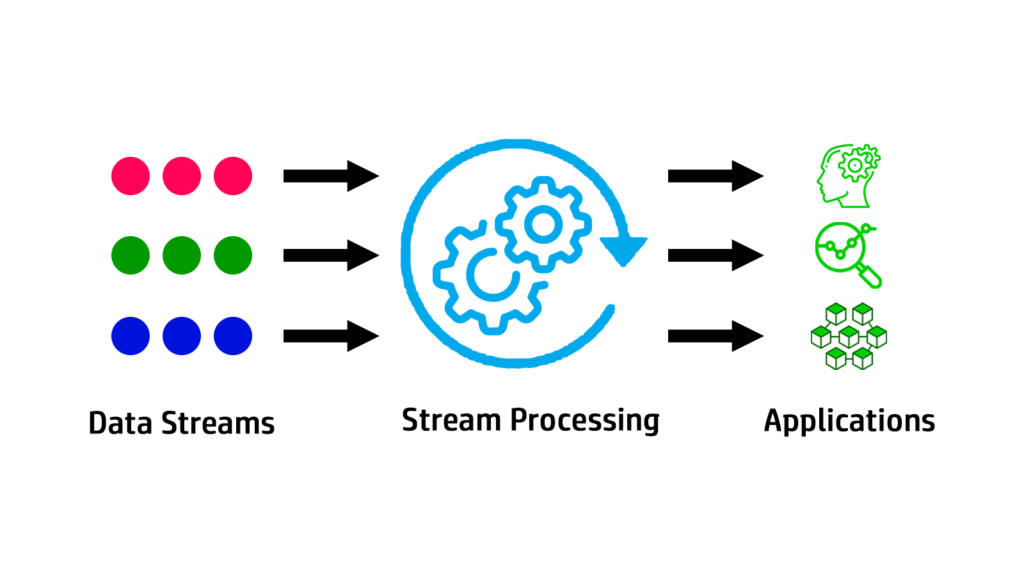

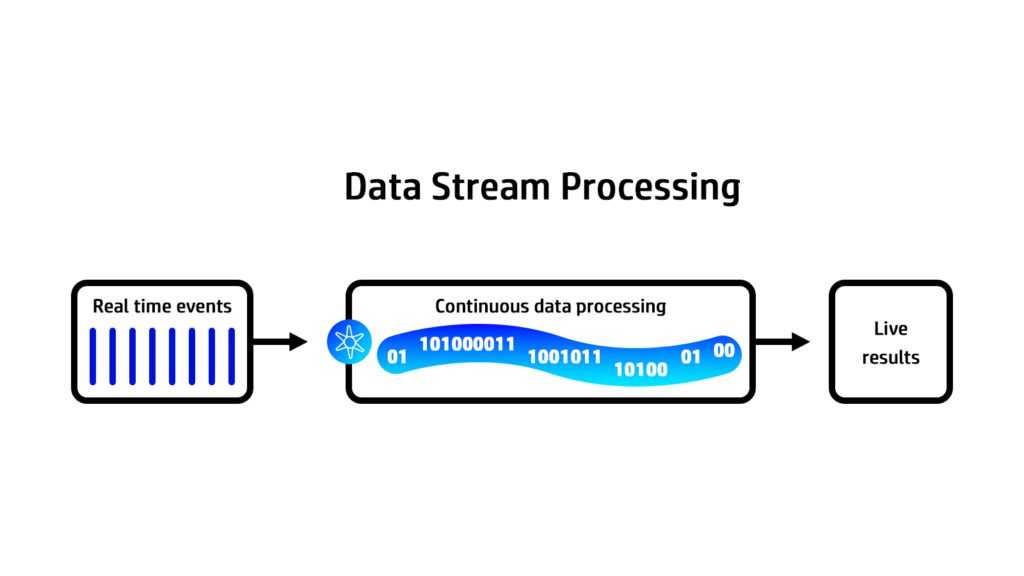

The term "streaming data" refers to data that is produced continuously by tens of thousands of data sources. A data stream consists of a series of data elements ordered in time. Each element represents an "event", a change in state that has occurred in the business and that is useful for the business to know about and analyze, often in real time. Streaming data covers a wide range of information, including log files produced by users of your mobile or web applications, e-commerce purchases, in-game player activity, data from social networks, financial trading floors, and geospatial services, as well as telemetry from connected devices or instrumentation in data centers. One way to think of a data stream is as an unending conveyor belt that continuously feeds data elements into a data processor.

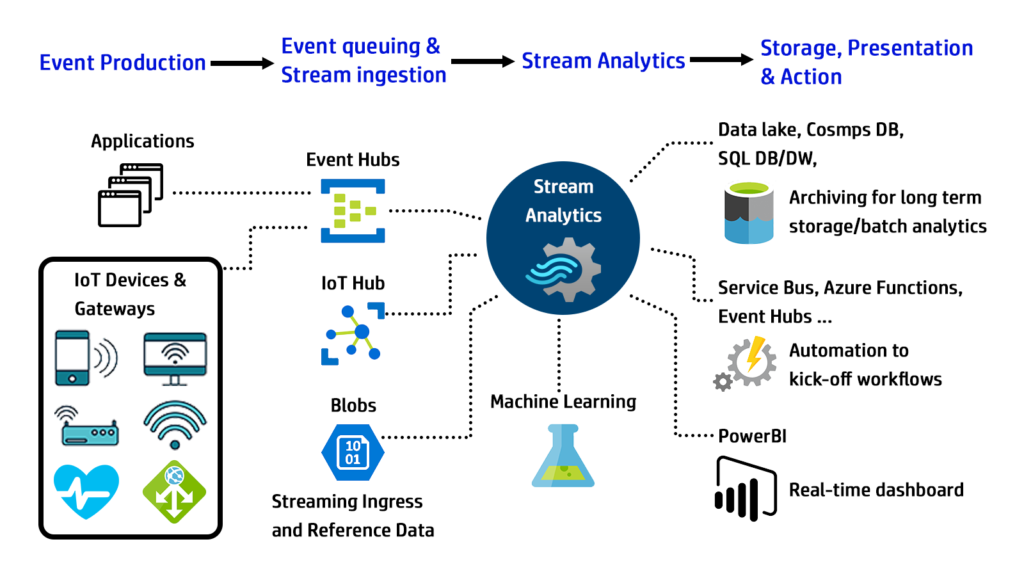

The importance of data streaming and stream processing has grown with the development of the Internet of Things (IoT) and rising customer expectations. Two examples of data streaming sources are home security systems and personal health monitors. A home security system uses multiple motion sensors to monitor different rooms in the house. These sensors produce a stream of data that is continuously supplied to a processing infrastructure, which either watches for unusual behavior in real time or stores the data for later analysis of harder-to-detect patterns. A health monitor, such as a heartbeat, blood pressure, or oxygen monitor, is another kind of streaming source. These devices produce data continuously, and the individual's safety could depend on the timely analysis of that data.

General Characteristics of Data Streams

Streaming data differs from traditional, historical data in a number of ways and comes through sensors, web browsers, and other monitoring systems. These are a few of the main traits of stream data:

- Time Sensitive

Each element in a data stream carries a timestamp. Stream data has a limited shelf life and becomes irrelevant after a while. For instance, data from a home security system that shows a suspicious movement must be examined and acted on quickly to remain useful.

- Continuous or Unbounded Nature

There is no beginning or end to streaming data. Data streams are continuous and happen in real-time, but they aren’t always acted upon at the moment, depending on system requirements.

- High Velocity

Data streams are generated at high speeds and require real-time processing, often measured in milliseconds. This high velocity requires special processing techniques such as stream processing to be able to keep up with the data.

- Heterogeneous

The stream data often originates from thousands of different sources that can be geographically distant. Due to the disparity in the sources, the stream data might be a mix of different formats.

- Imperfect

A data stream may contain corrupted or missing data elements as a result of the variety of their sources and various data transmission technologies.

- Volatile and Unrepeatable

As data streaming happens in real time, repeating the transmission of a stream is quite difficult. While there are provisions for retransmission, the retransmitted data may not be identical to the original. This makes data streams highly volatile. However, many modern systems keep a record of their data streams, so even if you can’t process the data at the moment it arrives, you can still analyze it later.
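Two of the traits above, time sensitivity and imperfection, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the event layout (a dict with `ts` and `value` keys), the function name `clean_stream`, and the five-second shelf life are all hypothetical choices made for the example.

```python
import time
from typing import Callable, Iterable, Iterator


def clean_stream(
    events: Iterable[dict],
    max_age_s: float = 5.0,
    now_fn: Callable[[], float] = time.time,
) -> Iterator[dict]:
    """Yield only the events that are complete and still fresh.

    Assumed (hypothetical) event layout: {"ts": <unix seconds>, "value": <reading>}.
    """
    for event in events:
        ts = event.get("ts")
        value = event.get("value")
        # Imperfect: skip elements with a missing timestamp or reading.
        if ts is None or value is None:
            continue
        # Time sensitive: skip elements older than the assumed shelf life.
        if now_fn() - ts > max_age_s:
            continue
        yield event


# Example with a fixed clock so the result is reproducible.
sample = [
    {"ts": 99.0, "value": 1},   # fresh and complete
    {"ts": 90.0, "value": 2},   # stale
    {"ts": 99.5},               # missing reading
    {"value": 3},               # missing timestamp
    {"ts": 98.0, "value": 4},   # fresh and complete
]
kept = list(clean_stream(sample, max_age_s=5.0, now_fn=lambda: 100.0))
```

Because `clean_stream` is a generator, it never needs the whole stream in memory, which matches the unbounded nature of the data. Whether stale or incomplete elements should be dropped, repaired, or routed elsewhere depends on the application.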

Types of Data Streams

- Event Streams

The term "event stream" refers to a continuous flow of real-time event data produced by various sources, including Internet of Things (IoT) devices, transactional systems, and customer interactions. Real-time data processing is needed to extract insights from event streams that can guide organizations in making decisions. Customer contacts, market trends, and home security systems are a few examples of event streams.

- Log Streams

Log streams are generated by internal IT systems or applications, which produce log files that record events such as system errors, security events, and troubleshooting information. Log streams require processing tools to extract meaningful insights and enable organizations to monitor the performance of their system components, detect faults, and improve system efficiency.

- Sensor Streams

Sensor streams are generated by sensors that continuously collect data such as location, temperature, and inventory levels. Sensor streams require real-time data processing to enable organizations to make informed decisions about how they operate and to improve the efficiency of their business processes.

- Social Media Streams

Social media streams are generated by social media feeds that produce continuous data on users' preferences and interactions. Social media streams require processing tools to extract meaningful insights and enable organizations to improve their customer interactions, detect market trends, and build brand awareness.

- Click Streams

Click streams are generated by users' interactions with websites and mobile applications. They provide insights into customer behavior, preferences, and interests that can be used to improve user experiences and increase sales.

Applications of Data Streams

- Real-time Analytics: Data streams are used for real-time monitoring and analysis of data. Businesses can gain insights into customer behavior, market trends, and operational performance by analyzing incoming data as it arrives. This enables timely decision-making and quick responses to changing conditions.

- Fraud Detection: In the financial industry, data streams can be employed to detect fraudulent activities in real-time. By analyzing patterns and anomalies in transactions, banks and credit card companies can identify and prevent unauthorized or suspicious transactions.

- Network Monitoring: Data streams are essential for monitoring network traffic in real-time. This is crucial for detecting and mitigating security threats, performance issues, and anomalies in computer networks.

- IoT (Internet of Things) Applications: IoT devices generate continuous streams of sensor data. These data streams can be utilized to monitor and control various aspects of environments such as smart homes, industrial processes, agriculture, and healthcare.

- Social Media Analysis: Data streams from social media platforms provide insights into public sentiment, trending topics, and user interactions. This information can be used for brand monitoring, marketing campaigns, and reputation management.

- Online Advertising: Advertisers can use data streams to personalize and optimize ad placements in real-time. By analyzing user behavior and preferences, they can deliver targeted ads to the right audience at the right time.

- Energy Management: Data streams from energy meters and sensors can be employed to monitor and manage energy consumption in buildings and industrial facilities. This helps in optimizing energy usage and reducing costs.

- Healthcare Monitoring: Wearable devices and medical sensors generate continuous streams of patient data. Healthcare professionals can monitor vital signs, track health conditions, and provide timely interventions based on real-time data.

- Environmental Monitoring: Data streams from environmental sensors can be used to monitor air quality, water quality, weather conditions, and other environmental parameters. This information is valuable for research, regulatory compliance, and disaster management.

- Supply Chain Management: Data streams can help track the movement of goods and materials in supply chains. Real-time monitoring allows for better inventory management, demand forecasting, and logistics optimization.

- Recommendation Systems: Streaming data on user preferences and behavior can be utilized to improve recommendation systems for content, products, and services. Online platforms can suggest relevant items based on ongoing user interactions.

- Traffic Management: Data streams from traffic cameras, sensors, and GPS devices can be analyzed to manage traffic flow, optimize routes, and provide real-time traffic updates to drivers.
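Several of the applications above, such as fraud detection and network monitoring, come down to spotting values that deviate from what the stream has shown so far. The sketch below is one simple way to do that, assuming a stream of numeric transaction amounts. It uses Welford's online algorithm to keep a running mean and standard deviation without storing past values, and flags any amount more than a chosen number of standard deviations from the mean. The names `RunningStats` and `flag_anomalies`, the threshold of 3, and the warm-up of 5 events are illustrative assumptions, and real fraud detection systems use far richer signals.

```python
import math
from typing import Iterable, Iterator


class RunningStats:
    """Running mean and sample standard deviation (Welford's online algorithm)."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def flag_anomalies(
    amounts: Iterable[float], threshold: float = 3.0, warmup: int = 5
) -> Iterator[float]:
    """Yield amounts that lie more than `threshold` standard deviations from the running mean."""
    stats = RunningStats()
    for amount in amounts:
        if stats.n >= warmup and stats.std > 0:
            z = abs(amount - stats.mean) / stats.std
            if z > threshold:
                yield amount
                # Assumption: flagged values are kept out of the baseline.
                continue
        stats.update(amount)


suspicious = list(flag_anomalies([10, 12, 11, 9, 10, 11, 500, 10]))
```

Each event is examined once and then discarded, so memory use stays constant no matter how long the stream runs.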

Benefits of Data Streaming for Businesses

Data streaming provides several benefits for businesses, including the ability to process data instantaneously, make faster decisions, and derive valuable insights that can enhance business outcomes. With streaming data, businesses can continuously collect and process data from various sources, including social media feeds, customer interactions, and market trends, to name a few.

Additionally, data streaming gives companies the ability to automate tasks like inventory management or information security based on real-time insights, which helps them run their operations more efficiently. Because data is analyzed as it is received, streaming also supports predictive analytics, enabling firms to make predictions based on both recent and past data.

Data streaming platforms can also help businesses with fault tolerance, so that their systems keep running even if some individual components fail. With low latency and a continuous flow of data, businesses can monitor logs and troubleshoot problems in real time. Overall, data streaming offers firms an effective means to gather knowledge, enhance decision-making, and gain a competitive edge.

Challenges in Processing Data Streams

Processing data streams comes with several unique challenges due to the continuous and high-velocity nature of the data. Here are some of the key challenges associated with data stream processing:

- Velocity and Volume: Data streams can produce vast amounts of data at high velocities. Managing and processing this constant flow of data in real-time can strain processing and storage systems, requiring scalable and efficient architectures.

- Latency and Real-Time Processing: Real-time processing is a fundamental requirement for data streams. Analyzing and deriving insights from data as it arrives necessitates low latency to enable timely decision-making. Delays or bottlenecks in processing can lead to missed opportunities or compromised outcomes.

- Data Order and Integrity: Maintaining the order of incoming data is crucial in many applications. Out-of-order data arrival can impact the accuracy of analysis and result in incorrect conclusions. Ensuring data integrity and handling unordered data is a significant challenge.

- Complex Event Processing: Many data stream applications involve detecting and responding to complex events or patterns in real-time. Developing algorithms and techniques for detecting these events amidst a continuous stream of data is challenging and requires sophisticated processing.

- Scalability and Resource Management: As data volumes and velocities increase, traditional processing systems may struggle to scale to handle the load. Ensuring the efficient allocation and management of computational resources becomes vital to prevent performance degradation.

- Data Quality and Cleansing: Incoming data streams might contain noise, outliers, duplicates, or missing values. Pre-processing and cleansing the data while maintaining real-time processing speed can be demanding.

- Integration with Batch Processing: Many organizations have existing batch processing systems. Integrating real-time data stream processing with these systems while maintaining consistency and accuracy can be complex.

- Fault Tolerance and Recovery: Due to the continuous nature of data streams, failures in the processing pipeline can occur. Implementing fault-tolerant mechanisms and ensuring the ability to recover from failures without interrupting the stream is critical.

- Privacy and Security: Real-time data processing involves handling sensitive information in some cases. Ensuring data privacy, compliance with regulations, and protecting against security breaches are ongoing challenges.

- Resource Constraints: In edge computing scenarios or with resource-constrained devices, processing data streams efficiently while considering limited computational and memory resources becomes a challenge.

- Algorithm Adaptation: Traditional batch processing algorithms may not directly apply to data stream processing. Adapting or developing new algorithms that can operate in real-time and handle evolving data distributions is necessary.

- Monitoring and Visualization: Monitoring the health and performance of a data stream processing system, as well as visualizing real-time insights, requires specialized tools and techniques.

- Cost and Infrastructure: Deploying and maintaining the infrastructure required for real-time data stream processing can be costly. Balancing performance, scalability, and cost-effectiveness is a continual challenge.

Addressing these challenges often requires a combination of specialized technologies, such as streaming platforms and stream processing frameworks (e.g., Apache Kafka, Apache Flink, Apache Spark Streaming), efficient algorithms, distributed computing principles, and robust architecture design. Organizations must carefully consider these challenges when implementing data stream processing solutions to ensure accurate, timely, and meaningful insights from their streaming data.
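To make the "Data Order and Integrity" challenge more concrete, here is a small sketch of one common way to cope with out-of-order arrival: hold events briefly in a buffer and release them in event-time order once they are older than a tolerated delay. The function name `reorder`, the `(event_time, payload)` tuple layout, and the `max_delay` parameter are assumptions for this example and are not taken from any particular framework.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple


def reorder(
    events: Iterable[Tuple[float, str]], max_delay: float
) -> Iterator[Tuple[float, str]]:
    """Re-emit (event_time, payload) pairs in event-time order.

    Events may arrive up to `max_delay` time units out of order. An event
    that arrives later than that is still emitted, but may be out of order.
    """
    heap: List[Tuple[float, int, str]] = []
    max_seen = float("-inf")
    seq = 0  # tie-breaker so events with equal timestamps keep arrival order
    for event_time, payload in events:
        max_seen = max(max_seen, event_time)
        heapq.heappush(heap, (event_time, seq, payload))
        seq += 1
        # Everything at or before (max_seen - max_delay) is assumed complete.
        while heap and heap[0][0] <= max_seen - max_delay:
            t, _, p = heapq.heappop(heap)
            yield (t, p)
    # The input ended: flush whatever is still buffered, in order.
    while heap:
        t, _, p = heapq.heappop(heap)
        yield (t, p)


arrivals = [(1, "a"), (3, "b"), (2, "c"), (5, "d"), (4, "e")]
ordered = list(reorder(arrivals, max_delay=2))
```

The trade-off is visible in `max_delay`: a larger value tolerates more disorder but adds latency and memory use, which ties this challenge directly to the latency and resource challenges listed above.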

Some quick questions and answers to clarify your doubts:

- What is streaming data?

- Answer: Streaming data is continuously generated data that is transmitted in real-time and can be processed and analyzed while it is still being created.

- What are some examples of sources that generate streaming data?

- Answer: Examples include social media feeds, sensor data from IoT devices, stock market tick data, website clickstreams, and live video/audio feeds.

- What is the main characteristic of streaming data processing?

- Answer: The main characteristic is the ability to process data continuously and in real-time as it arrives, without the need to store it first.

- What is the difference between streaming data and batch processing?

- Answer: Streaming data is processed continuously and in real-time as it arrives, while batch processing involves collecting and processing data in discrete chunks or batches.

- What is meant by "event time" and "processing time" in streaming data processing?

- Answer: Event time refers to the time when an event actually occurred, while processing time refers to the time when the system processes that event.

- What are some challenges associated with processing streaming data?

- Answer: Challenges include dealing with high data volumes, handling data velocity, ensuring low latency, managing out-of-order data, and handling fault tolerance.

- What is the role of stream processing frameworks like Apache Kafka, Apache Flink, or Apache Spark Streaming?

- Answer: These frameworks provide tools and APIs for developers to process and analyze streaming data efficiently and reliably.

- What is a Kafka topic?

- Answer: A Kafka topic is a category or feed name to which messages are published by producers and from which messages are consumed by consumers.

- What is a key characteristic of Apache Flink?

- Answer: Apache Flink is known for its low-latency, high-throughput stream processing engine with exactly-once semantics.

- What is a window in stream processing?

- Answer: A window is a finite section of the stream that contains a subset of the data, usually defined by a time or event count boundary.

- How does watermarking help in stream processing?

- Answer: Watermarking is a technique used to track progress in event time, helping to handle late-arriving data and ensuring correctness in windowed computations.

- What is a stateful computation in stream processing?

- Answer: A stateful computation maintains state across multiple events or time windows, allowing for more complex analysis and aggregation.

- How does Apache Kafka ensure fault tolerance?

- Answer: Apache Kafka replicates data across multiple brokers to ensure fault tolerance and durability of messages.

- What is the purpose of stream processing in Internet of Things (IoT) applications?

- Answer: Stream processing in IoT applications enables real-time monitoring, analysis, and decision-making based on data collected from sensors and devices.

- How does stream processing contribute to fraud detection in financial transactions?

- Answer: Stream processing allows financial institutions to analyze transaction data in real-time, detecting patterns and anomalies that may indicate fraudulent activity.

- What is meant by "exactly-once" processing semantics in stream processing?

- Answer: Exactly-once processing ensures that each event is processed and its results are recorded exactly once, without duplication or loss, even in the presence of failures.

- What role does Apache Spark Streaming play in the Apache Spark ecosystem?

- Answer: Apache Spark Streaming provides real-time stream processing capabilities on top of the Apache Spark engine, allowing developers to integrate batch and streaming processing.

- How can stream processing be used in recommendation systems?

- Answer: Stream processing can analyze user interactions and behavior in real-time to provide personalized recommendations, improving user engagement and satisfaction.

- What are some privacy considerations when processing streaming data?

- Answer: Privacy considerations include ensuring compliance with regulations like GDPR, anonymizing sensitive data, and implementing robust security measures to protect data in transit and at rest.

- How does stream processing contribute to real-time analytics in e-commerce?

- Answer: Stream processing enables e-commerce platforms to analyze user behavior, update product recommendations, and detect trends in real-time, improving customer experience and increasing sales.
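Several of the answers above (event time, windows, watermarks, and stateful computation) fit together in one small example. The sketch below counts events per key in fixed-size (tumbling) event-time windows. It is a simplified illustration written in plain Python rather than against any framework's API; the function name `tumbling_counts`, the `(event_time, key)` input layout, and the rule "watermark = largest event time seen minus an allowed lateness" are assumptions chosen for clarity.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def tumbling_counts(
    events: Iterable[Tuple[int, str]], window_size: int, allowed_lateness: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count events per key in tumbling event-time windows.

    `events` are (event_time, key) pairs in arrival order. A window
    [start, start + window_size) is emitted once the watermark reaches its end.
    """
    # State kept across events: window_start -> key -> count.
    state: Dict[int, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    watermark = float("-inf")
    for event_time, key in events:
        window_start = (event_time // window_size) * window_size
        if window_start + window_size <= watermark:
            continue  # the window was already emitted: drop the late event
        state[window_start][key] += 1
        # Assumed watermark rule: events may be at most `allowed_lateness` late.
        watermark = max(watermark, event_time - allowed_lateness)
        closed = sorted(s for s in state if s + window_size <= watermark)
        for start in closed:
            yield start, dict(state.pop(start))
    # End of input: emit the windows that are still open.
    for start in sorted(state):
        yield start, dict(state.pop(start))


stream = [(1, "a"), (4, "b"), (3, "a"), (12, "a"), (2, "b"), (25, "a")]
windows = list(tumbling_counts(stream, window_size=10, allowed_lateness=2))
```

In this run, the event `(2, "b")` arrives after the watermark has already passed the end of the first window, so it is dropped rather than counted. Production engines offer other policies for such late data, for example updating a result that was already emitted.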
